How I discovered a 9.8 critical security vulnerability in ZeroMQ mostly by pure luck, and my two cents about the xz backdoor

If you work in the tech industry, I bet you have heard the crazy story of the XZ backdoor. Long story short, an open-source contributor known as Jia Tan contributed to the XZ library. After spending more than two years gaining the trust of the original author, that person (or persona) patched the software with layers of obfuscation and shell scripts extracted from seemingly harmless binary test files. Eventually, a backdoor was injected into sshd, allowing the attacker to execute code remotely. If you are interested in the story, you can read more about it in the following articles:

The backdoor was discovered by a principal software engineer at Microsoft who happened to be testing PostgreSQL performance and noticed abnormal CPU usage after updating his Linux system. More importantly, he dug into why that was happening and found the backdoor.

Tom from Tom and Jerry, captioned "Notices 500ms delay, sus." Originally from this tweet.

It's not as intense as the XZ backdoor story, but today I would like to share how I found CVE-2019-13132, a critical security vulnerability in ZeroMQ with a CVSS 3 score of 9.8, primarily through pure luck, along with my two cents about the xz backdoor event.

...

Read the full article

High-speed 10Gbps full-mesh network based on USB4 for just $47.98

As a software engineer, software is part of your job title, so it almost feels like software is the only thing you should know. However, over decades of building software, I have realized that gaining knowledge about hardware is as important as learning to code. Although I might never be as good as a hardware expert, I want to expand myself beyond just software. So, I never shy away from getting my hands dirty with hardware.

To reduce the cost of my AWS cloud services, I recently decided to move some less mission-critical services onto my bare-metal servers. That meant learning how to build a bare-metal Kubernetes cluster and set up its network. After some research and plenty of trial and error, I finally built a relatively low-cost cluster with a high-speed, fully meshed network. The most interesting part is that the networking is based on a USB4 Ethernet bridge instead of a conventional Ethernet switch and cables. I tested the network speed, and it can hit 11Gbps. The whole network cost only $47.98! Today, I would like to share my experience building it.

The UM790 Pro bare-metal cluster with full-mesh USB4 cable connections

...

Read the full article

Why I built a self-serving advertisement solution for myself

Originally posted on PolisNetwork’s blog

Selling a product is never easy. For an experienced software engineer like me, building a software product is much easier than selling it. In fact, I can build and maintain several products simultaneously, but that only pays off if I find customers willing to use them and pay for them. Therefore, marketing plays a key role in success.

There are many ways to market. Buying ads sounds like an easy solution for early customer acquisition, but it has problems. Here are some of the issues:

- It could be a substantial financial burden for early-stage products to keep burning cash before making any money.

- You need to learn how to operate ads efficiently. That requires some trial and error, which can be stressful under financial burden.

- Click fraud is hard to avoid, and it is hard to tell how much of your money goes to it.

- Most ad networks out there raise serious privacy concerns, and more and more people are installing ad blockers to protect their privacy.

With these in mind, I’m not too fond of buying ads to acquire customers for my products. Buying ads certainly has some pros, but I prefer approaches that grow organic traffic in the long run, such as building useful free online tools or content marketing.

For example, I made the online Beancount formatter tool to help sell BeanHub. Another example is Avataaars Generator, which I built in 2017 as a hobby project and which became very popular. If you search “avatar generator” on Google, it shows up as the number one result:

It brings in some organic traffic every day without spending a single penny.

...

Read the full article

Why and how I build and maintain multiple products simultaneously

Building a single successful software product is hard. Building several at the same time is extremely hard. Most people would suggest focusing on only one at a time.

But is that the only way?

I run a small startup company, Launch Platform. As the name implies, it’s a platform for launching innovative software products. So far, I have launched and am maintaining two products:

- Monoline - a messenger-like app, but only for sending notes to yourself

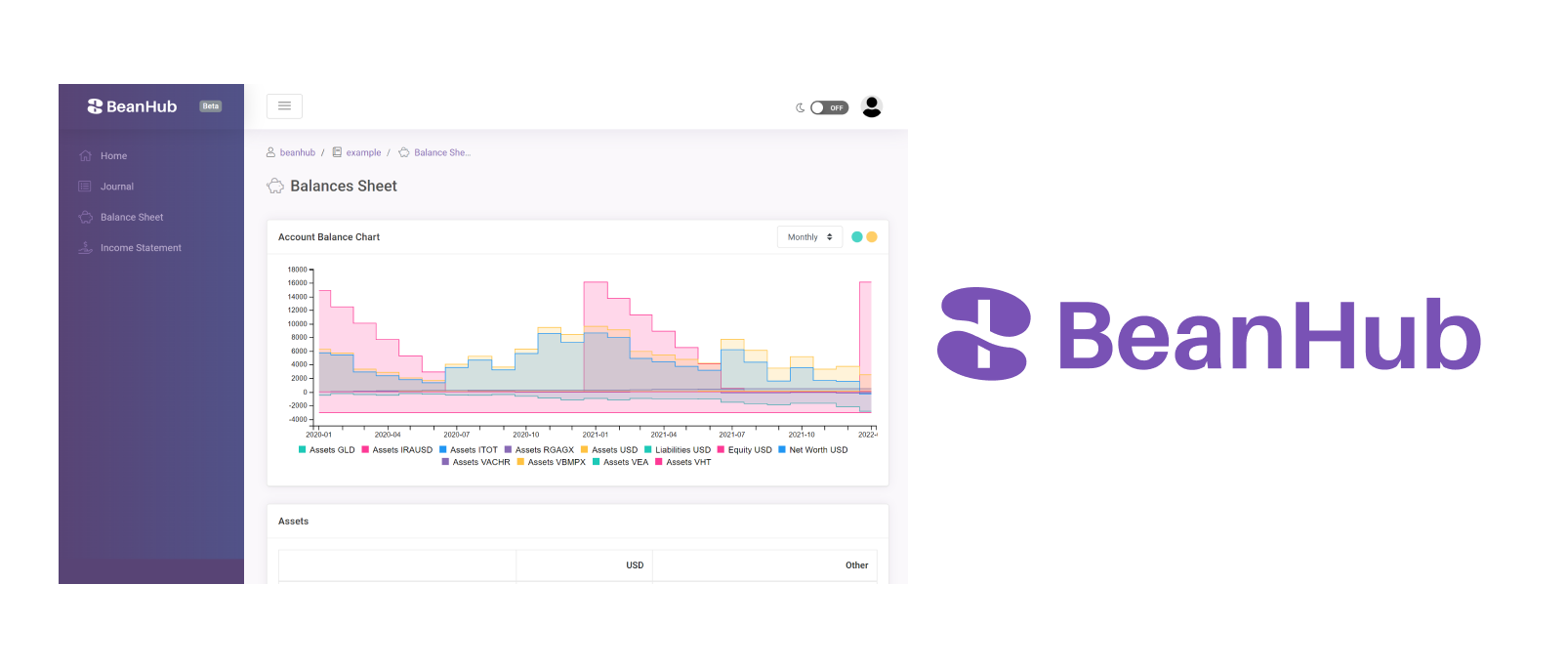

- BeanHub - a Beancount text-based accounting book based on Git

You may ask: is there a good reason to build several products simultaneously instead of just one?

Personally, while it’s not for everyone, the answer is yes for me. Recently, Monoline finally got its first paying customer. While it may not sound like a big deal, it is a big milestone for me. It validates the need and proves that people are willing to pay for the product. Today, I would like to share my reasons and my experience building, launching, and maintaining multiple products simultaneously.

...

Read the full article